Write live video to mp4 file:

ffmpeg -f video4linux2 -s 320x240 -i /dev/video0 test.mp4

With sound:

ffmpeg -f video4linux2 -s 320x240 -i /dev/video0 -f alsa -i hw:0 -f mp4 test3.mp4

Write to a raw .264 file:

ffmpeg -f video4linux2 -s 320x240 -i /dev/video0 -vcodec libx264 -f h264 test.264

With access unit delimiters:

ffmpeg -f video4linux2 -s cif -i /dev/video0 -x264opts slice-max-size=1400:vbv-maxrate=512:vbv-bufsize=200:fps=15:aud=1 -vcodec libx264 -f h264 rtp_aud_.264

Convert raw .264 to mp4:

ffmpeg -i test.264 test_convert.mp4

Pipe into another process:

ffmpeg -f video4linux2 -s cif -i /dev/video0 -x264opts slice-max-size=1400:vbv-maxrate=512:vbv-bufsize=200:fps=15 -vcodec libx264 -f h264 pipe:1 | ./pipe_test

Send over RTP and generate SDP on stdout:

ffmpeg -f video4linux2 -s cif -i /dev/video0 -x264opts slice-max-size=1400:vbv-maxrate=512:vbv-bufsize=200:fps=15 -vcodec libx264 -f rtp rtp://127.0.0.1:49170
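A rough budget for the slice-max-size value: over RTP, an H.264 NAL unit that does not fit into one packet has to be split into FU-A fragments, so keeping slices small avoids fragmentation. Assuming a 1500-byte Ethernet MTU and IPv4, UDP and RTP headers without options, CSRCs or extensions, the room left for one RTP payload is:

$$
\text{payload}_{\max} = \underbrace{1500}_{\text{MTU}} - \underbrace{20}_{\text{IPv4}} - \underbrace{8}_{\text{UDP}} - \underbrace{12}_{\text{RTP}} = 1460\ \text{bytes}
$$

So slice-max-size=1400 leaves about 60 bytes of headroom, while the 1460 used in the VLC commands fills the budget exactly. x264 counts estimated NAL overhead in slice-max-size, so treat both figures as approximate.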

The RTP command generates the following SDP on stdout:

v=0
o=- 0 0 IN IP4 127.0.0.1
s=No Name
c=IN IP4 127.0.0.1
t=0 0
a=tool:libavformat 54.17.100
m=video 49170 RTP/AVP 96
a=rtpmap:96 H264/90000
a=fmtp:96 packetization-mode=1

Low Delay RTP:

ffmpeg -f video4linux2 -s cif -i /dev/video0 -x264opts slice-max-size=1400:vbv-maxrate=100:vbv-bufsize=200:fps=25 -vcodec libx264 -tune zerolatency -f rtp rtp://127.0.0.1:49170

The stream can be played with the following command (test.sdp must contain the generated SDP):

./vlc -vvv test.sdp

Using VLC to stream over RTP and display the live stream at the same time:

./vlc -vvv v4l2:///dev/video0 :v4l2-standard= :v4l2-dev=/dev/video0 --v4l2-width=352 --v4l2-height=288 --sout-x264-tune zerolatency --sout-x264-bframes 0 --sout-x264-aud --sout-x264-vbv-maxrate=1000 --sout-x264-vbv-bufsize=512 --sout-x264-slice-max-size=1460 --sout '#duplicate{dst="transcode{vcodec=h264,vb=384,scale=0.75}:rtp{dst=130.149.228.93,port=49170}",dst=display}'

However, this does not seem to generate SPSs and PPSs.

Edit: --sout-x264-options=repeat-headers is necessary to repeat the SPS and PPS in the stream.
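You can check whether a stream actually carries SPS (nal_unit_type 7), PPS (8) and AUD (9) NAL units by scanning the H.264 Annex B byte stream for start codes. The sketch below is a minimal Python version. It assumes the input is a raw Annex B stream, such as test.264 or rtp_aud_.264 from the ffmpeg commands. The function names are hypothetical. This is not the ./pipe_test program mentioned earlier, whose source is not given here.

```python
from collections import Counter

# A few nal_unit_type values from the H.264 spec (Table 7-1)
NAL_NAMES = {1: "non-IDR slice", 5: "IDR slice", 6: "SEI", 7: "SPS", 8: "PPS", 9: "AUD"}


def nal_unit_types(stream):
    """Return the nal_unit_type of every NAL unit in an Annex B byte stream.

    Each NAL unit follows a 00 00 01 start code (a 4-byte 00 00 00 01 start
    code also contains that pattern). Emulation prevention guarantees the
    pattern never occurs inside a NAL unit, so a plain search is enough.
    """
    types = []
    pos = stream.find(b"\x00\x00\x01")
    while pos != -1 and pos + 3 < len(stream):
        types.append(stream[pos + 3] & 0x1F)  # low 5 bits of the NAL header byte
        pos = stream.find(b"\x00\x00\x01", pos + 3)
    return types


def summarize(stream):
    """Count NAL units by name; unknown types are reported as 'type N'."""
    return Counter(NAL_NAMES.get(t, f"type {t}") for t in nal_unit_types(stream))


def has_parameter_sets(stream):
    """True if the stream contains at least one SPS and at least one PPS."""
    types = set(nal_unit_types(stream))
    return 7 in types and 8 in types
```

For example, with the block saved under the hypothetical name nal_scan.py, you could run `python3 -c 'import sys, nal_scan; print(nal_scan.summarize(sys.stdin.buffer.read()))' < rtp_aud_.264`. With aud=1 you would expect roughly one AUD per frame. The script reads until EOF, so when piping a live capture through pipe:1, limit its length, for example with ffmpeg's -t option.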

Low delay capture with VLC:

./vlc --live-caching 0 --sout-rtp-caching 0 -vvv v4l2:///dev/video1 :v4l2-standard= :v4l2-dev=/dev/video0 --v4l2-width=352 --v4l2-height=288 --sout-x264-tune zerolatency --sout-x264-bframes 0 --sout-x264-options repeat-headers=1 --sout-x264-aud --sout-x264-vbv-maxrate=1000 --sout-x264-vbv-bufsize=512 --sout-x264-slice-max-size=1460 --sout '#duplicate{dst="transcode{vcodec=h264,vb=384,scale=0.75}:rtp{dst=130.149.228.93,port=49170}",dst=display}'
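To see what actually arrives on port 49170 from either the ffmpeg or the VLC commands, a receiver can parse the 12-byte RTP fixed header (RFC 3550, section 5.1) and the first payload byte. With packetization-mode=1 (RFC 6184), that byte is either a whole NAL unit header, a STAP-A aggregate (type 24) or an FU-A fragment (type 28). The sketch below is a minimal illustration under those assumptions. The names parse_rtp, h264_payload_kind and receive, and the default bind address, are hypothetical.

```python
import socket
import struct
from typing import NamedTuple


class RtpPacket(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int
    payload: bytes


def parse_rtp(packet):
    """Parse the RTP fixed header (RFC 3550, 5.1) and return header fields plus payload."""
    if len(packet) < 12:
        raise ValueError("packet is shorter than the 12-byte RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    offset = 12 + 4 * (b0 & 0x0F)  # skip the CSRC list (CC field)
    if b0 & 0x10:  # X bit: extension = 4-byte header + length in 32-bit words
        (ext_words,) = struct.unpack("!H", packet[offset + 2:offset + 4])
        offset += 4 + 4 * ext_words
    end = len(packet)
    if b0 & 0x20:  # P bit: the last byte holds the number of padding bytes
        end -= packet[-1]
    return RtpPacket(b0 >> 6, bool(b1 & 0x80), b1 & 0x7F, seq, ts, ssrc, packet[offset:end])


def h264_payload_kind(payload):
    """Classify an H.264 RTP payload (RFC 6184) by its first byte."""
    nal_type = payload[0] & 0x1F
    if nal_type == 24:
        return "STAP-A"
    if nal_type == 28:  # FU-A: the fragmented NAL's type sits in the FU header
        fu = payload[1]
        position = "start" if fu & 0x80 else "end" if fu & 0x40 else "middle"
        return f"FU-A({fu & 0x1F}, {position})"
    return f"single({nal_type})"


def receive(host="127.0.0.1", port=49170, count=20):
    """Print a one-line summary of the next `count` packets arriving on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(count):
            data, _addr = sock.recvfrom(2048)
            pkt = parse_rtp(data)
            print(pkt.sequence, pkt.timestamp, pkt.payload_type,
                  "M" if pkt.marker else "-", h264_payload_kind(pkt.payload))
```

For the ffmpeg commands, call receive() on the same machine, with no player bound to port 49170 at the time. For the VLC commands, call it on the destination host with host="0.0.0.0". Timestamps advance in 90 kHz units (H264/90000 in the SDP), so at 15 frames/s consecutive frames would differ by about 6000. Lines showing single(7) and single(8), or STAP-A packets that may aggregate them, suggest the SPS and PPS are being sent in-band.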

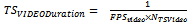

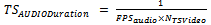

is given by

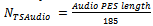
